Today, Google gave select Pixel ‘superfans’ a peek into Bard, its ChatGPT rival. Much like its competitors, Bard offers users a blank text box and an invitation to ask questions about any topic they like. Also like its competitors, the chatbot gives not-so-accurate answers and has a tendency to invent information. This is why Google has already clarified that Bard is not a replacement for its search engine but, rather, a “complement to search”: a bot that users can bounce ideas off, use to generate writing drafts, or just chat with about life.

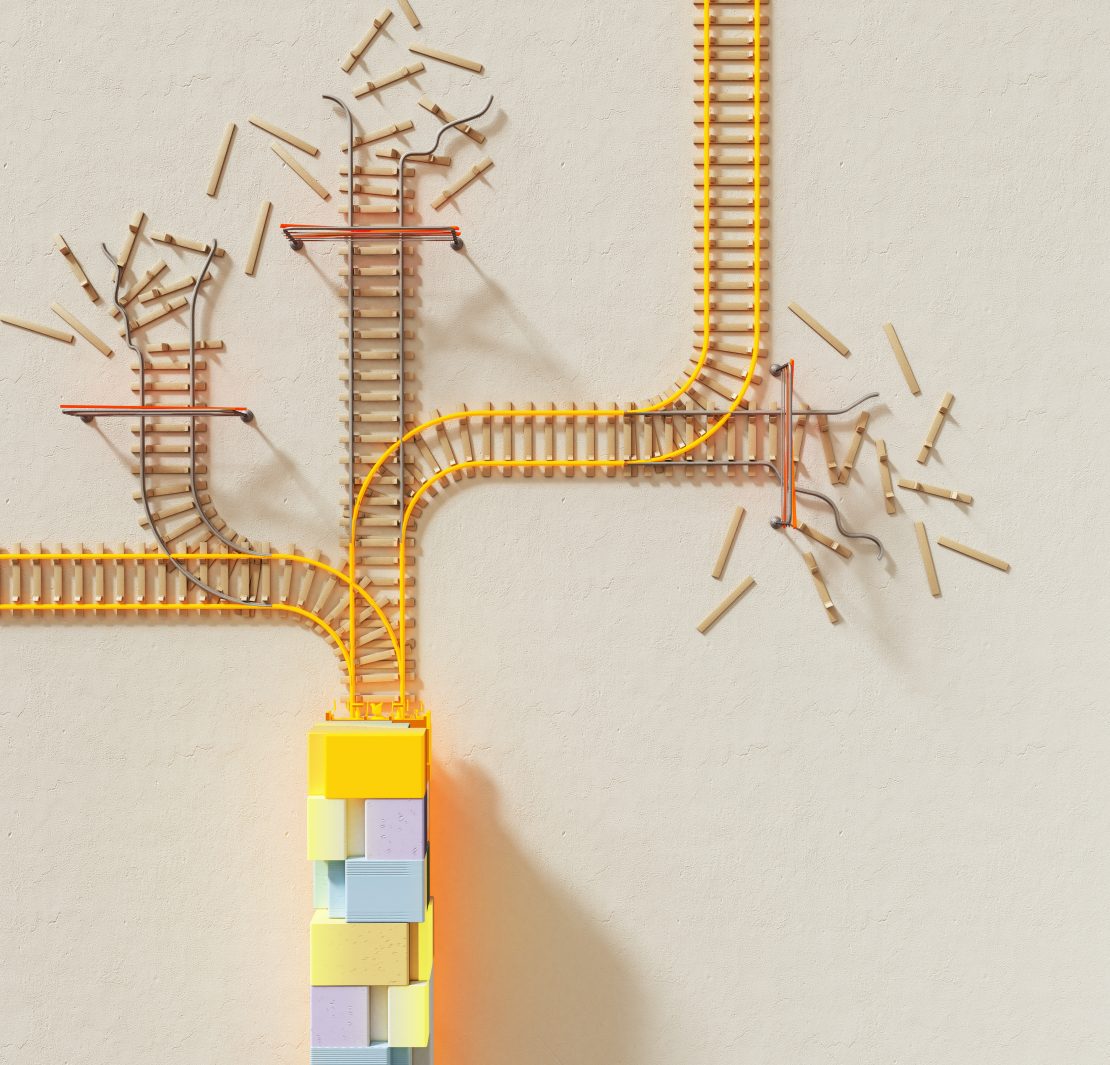

Despite these accuracy problems, Google practically rushed its AI chatbot to market as it struggled to keep up with the sudden popularity of its rivals. Google announced Bard last month in a bid to get ahead of Microsoft, which took the wraps off its ChatGPT-powered Bing just a day later. It was quite obvious that the tech giant wasn’t entirely prepared for the launch, which apparently left a lot of Googlers upset. Microsoft’s Bing AI, too, faced plenty of criticism and was the butt of Twitter jokes when it launched.

Last year, the integration of AI into our everyday internet use kicked off a race between the tech giants that we can’t undo. AI is everywhere. AI translation has improved to the point that it is on the verge of eliminating language barriers on the internet among the most widely spoken languages. College teachers are tearing their hair out because AI text generators can now write essays as well as the average undergraduate, making it possible to cheat in ways that no plagiarism checker can detect. Copilot, a new tool that uses machine learning to predict and complete lines of computer code, is taking us one step closer to an AI system that can write itself. AI can now write books, create portraits from scratch and do just about everything in between.

It’s a common point of anxiety in the tech world: “if we don’t build it, someone else will”. Tech companies are diving headfirst into the race so they don’t fall behind, even if they’re not entirely sure what they’re doing. There is no time for scientists to take a step back and breathe. And some experts believe that this constant hurry to be first might not end well for anyone.

First, there’s the whole “AI could wipe out humanity” mess, which, while not an immediate threat, could well happen someday, especially if we keep going the way we are. Experts are already afraid of what they have created. There are risks of overoptimization, weaponization, and ecological collapse. Basically, the scientists creating these AIs risk losing control over them, and this isn’t just the plot of a sci-fi movie anymore.

For the longest time, AI safety was a problem that didn’t really exist. We spent all these years merrily thinking we still had at least a few more decades before we had to worry about AI doom. But in less than a year, the problem has become a reality, and researchers are scrambling to find solutions. It’s too late to rewind any of this now, but maybe it’s time to stop and smell the roses, for our own sake.