More than one in every ten people has a mental disorder, and only a fraction of them have proper access to the care they need.

So what about the people who don’t have the time, money or resources to heal? Some of them seem to have found comfort in online therapy. AI-powered chatbots and free resources seem to be replacing in-person therapy over time. But whether or not they’re a permanent solution is up for debate.

Psychotherapists have been adapting AI for mental health since the 1960s. Conversational AI has since become much more advanced and ubiquitous, with the chatbot market forecast to reach US$1.25 billion by 2025.

Replika is one such service, a US chatbot app that offers users an “AI companion who cares, always here to listen and talk, always on your side”. Computer programmer Eugenia Kuyda launched the app in 2017, and it now has more than two million active users. Each has a chatbot or “replika” unique to them, as the AI learns from their conversations. Users can also design a cartoon avatar for their chatbot. Ms Kuyda told the BBC that people using the app range from autistic children who turn to it as a way to “warm up before human interactions”, to adults who are simply lonely and need a friend. Others are said to use Replika to practise for job interviews, to talk about politics, or even as a marriage counsellor.

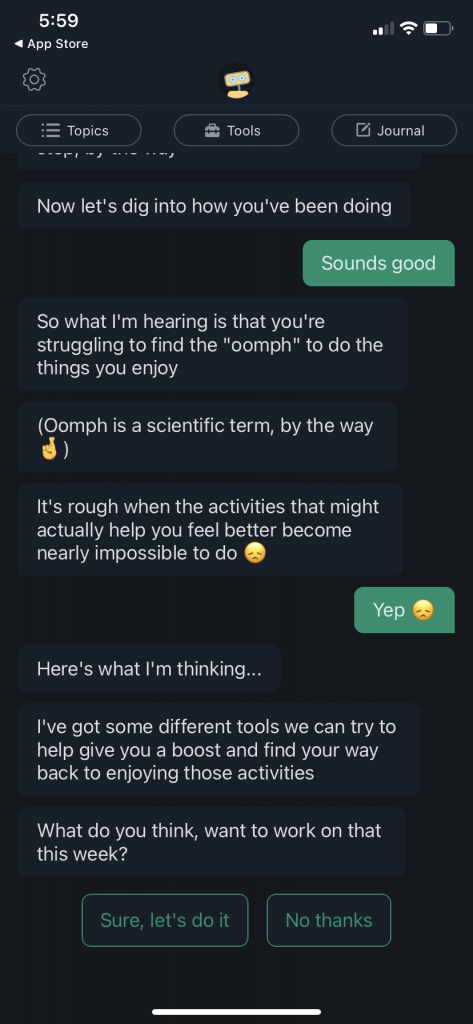

Woebot is another app built around such a chatbot. Psychologist and technologist Alison Darcy launched it in 2017. It’s one of the very few apps I could find that comes with no surprise in-app purchases or limited offers.

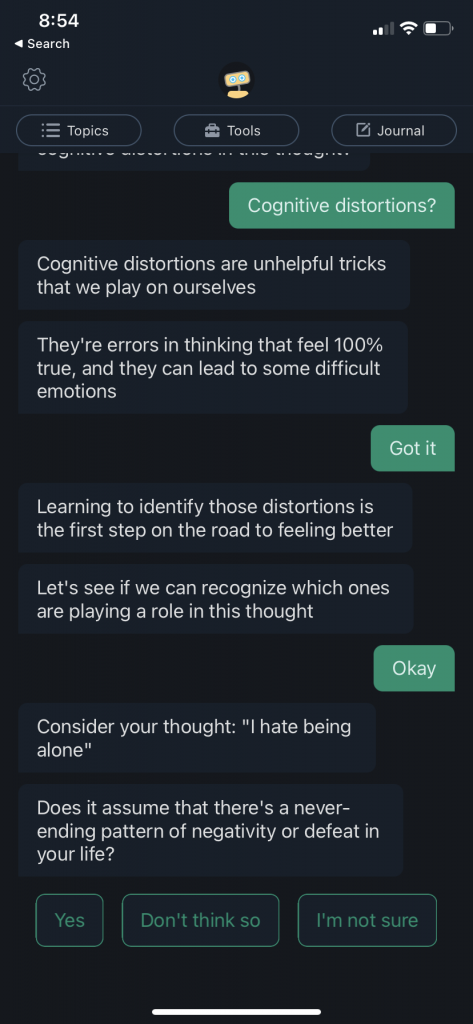

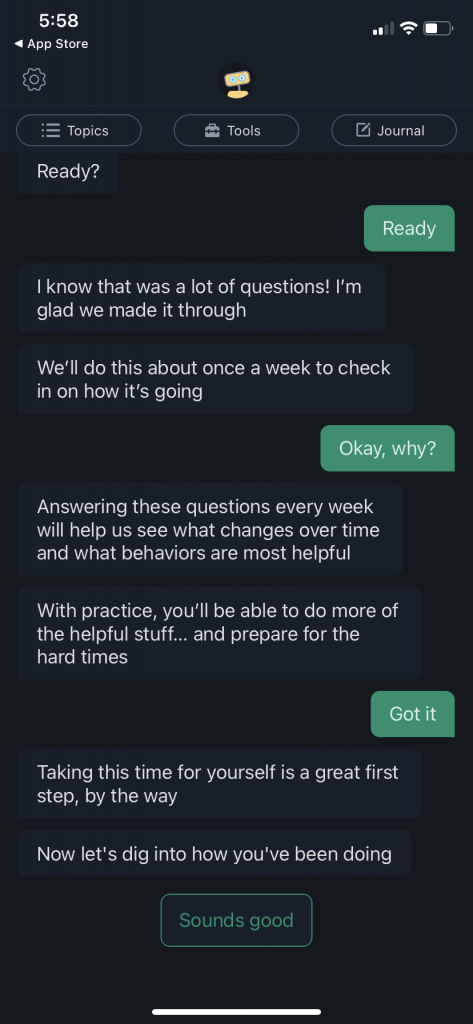

It’s a pretty simple concept: this ‘woebot’ will ask you certain questions about your mood and help you find temporary solutions to navigate your feelings. Most of the time you answer by picking from ready-made prompts rather than having a free conversation with the bot. ‘It’ (?) speaks a lot like an actual therapist who has been forcibly trapped inside my phone and brainwashed to serve me and only me. There are tons of fake attempts at sympathising and empathising, and the bot almost does a good job.

After a few questions about my current feelings and moods, the bot suggested I do something to get moving and somehow I ended up dancing alone in my bedroom to the Belgian techno anthem of the 1980s, Pump Up The Jam. And you know what: it actually felt good.

As is common with in-person therapy, the bot will not suggest, advise or tell you exactly what to do. With keywords like ‘consider’, ‘let’s see’ and ‘perhaps’, it will help you as you figure out the solution yourself. And you also end up learning new words for things you’re feeling. At the very least, this app and a few similar ones will help you work through your negative thought patterns (or cognitive distortions, as I’ve learned) and provide temporary and immediate comfort, when your therapist can’t.

Some of these apps also have features to help you meditate, and community chat options that let you interact with other users. A lot of the ‘legitimate’ chatbot apps I could find were either not available in my region or locked behind paywalls after a limited amount of free access. There’s clearly a disparity in the online resources available, but we’re slowly opening up borders and getting somewhere.

There are also certain risks to consider. Just 3 days ago, a man in Belgium reportedly died by suicide after conversing with an AI chatbot about climate change, according to the man’s widow.

“Without Eliza [the chatbot], he would still be here,” the widow told the Belgian outlet La Libre. She showed La Libre messages between her husband, referred to as Pierre, and the bot, known as “Eliza,” which allegedly showed that he appeared to treat the bot as a human and that their exchanges became more alarming over the six weeks before his death. The man suffered from ‘eco-anxiety’, a heightened form of worry surrounding environmental issues, and allegedly turned to the chatbot for comfort, a choice his widow says proved fatal. According to Vice, the app currently has 5 million users and was trained with the “largest conversational dataset in the world.”

Most of these apps aim to provide you with a mental well-being companion. But with the latest controversies surrounding the development of AI, it seems safer to keep one foot out of the door with these things.

Ultimately, given the current state of the world, the climate and the economy, online chatbot therapy holds promise. So long as we limit technology’s data supply when it’s being used in health care, AI chatbots will only be as powerful as the words of the mental healthcare professionals they parrot. It might work as a temporary solution for many, but I’m not so sure it’s worth firing your therapist over.