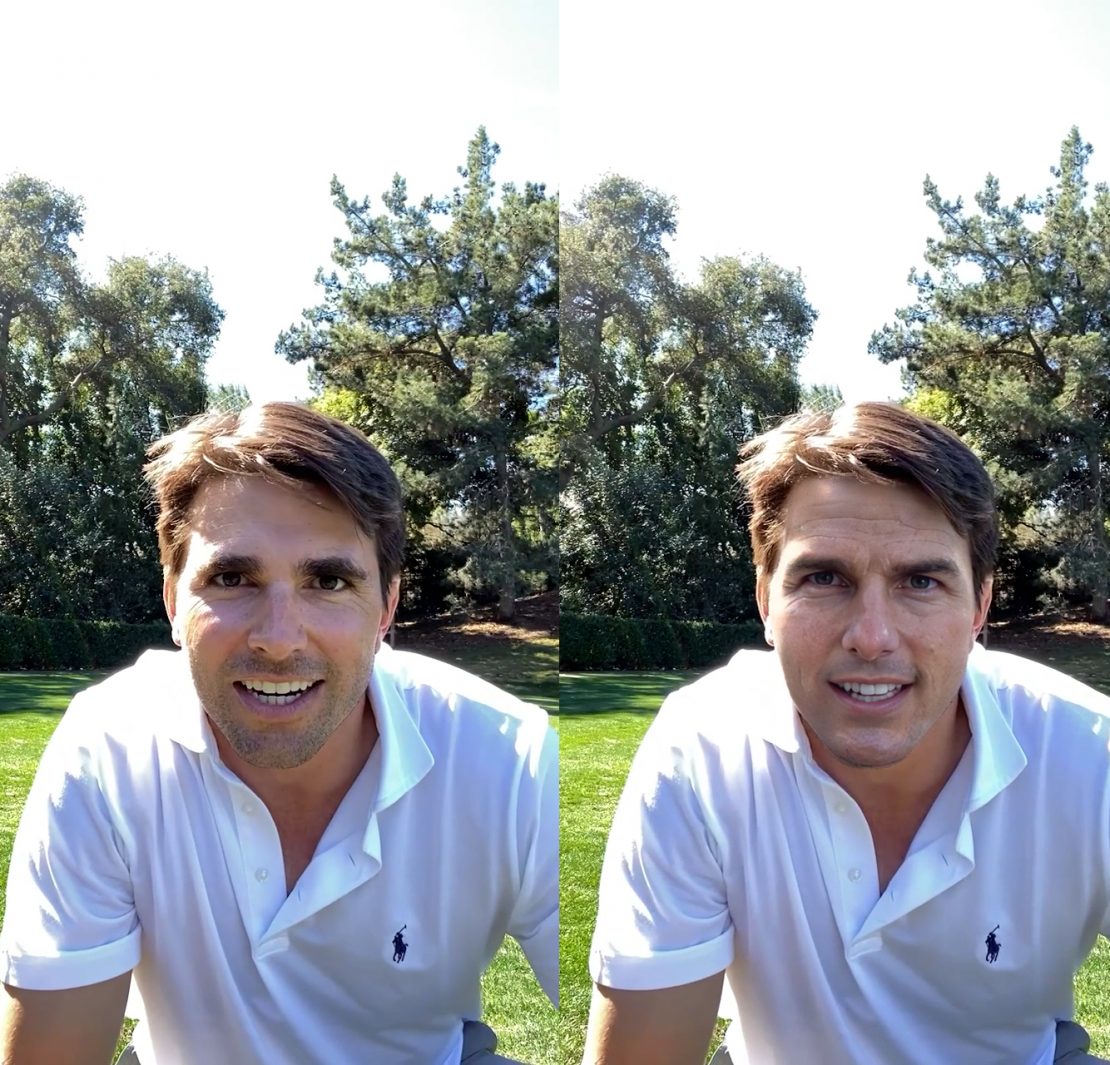

Earlier in 2021, I came across a TikTok video of Tom Cruise while casually scrolling through reels. You’d expect him to be either standing on planes or crashing his bike through buildings for his next action venture. Instead, it was a video of him performing a coin trick that left me confused in more ways than one. “The pandemic must have hit him hard,” I thought.

Over the next week, videos of the actor doing very out-of-character things started popping up on my ‘explore’ page. In one video, you can see him goofing around in an upscale men’s clothing store. In another, he growls playfully during a short rendition of Dave Matthews Band’s “Crash Into Me.”

If you’re even remotely a fan of the Mission Impossible franchise, those videos probably raised red flags. The chad-like Tom Cruise in those videos was, in fact, not the real Maverick actor at all, nor was he in any way related to him.

The 10 videos, posted between February and June 2021, featured an artificial intelligence-generated doppelganger meant to look and sound like him. They were ‘deepfakes’, a term that combines “deep learning” and “fake”. These are digitally manipulated videos in which people’s faces or voices can be switched out.

Visual and AI effects artist Chris Umé created the Tom Cruise videos with the help of a Cruise stand-in, actor Miles Fisher. Umé’s venture became so popular that it inspired him to join up with others to launch a company called Metaphysic in June 2021. It uses the same deepfake technology to make otherwise impossible ads and restore old film. Metaphysic’s deepfake projects for clients have included a Gillette razor campaign that recreated a young Deion Sanders along with his 1989 draft-day look, and a campaign for the Belgian Football Association that brought two deceased Belgium team managers back to life.

Deepfakes are scarily accurate and realistic. Unfortunately, though, this comes with its own downsides. Many people misuse this technology to make nonconsensual pornography featuring the faces of celebrities, influencers or any other person, including children. And you don’t need to be a ‘tech guru’ to access it either. Over the last two days alone, hundreds of sexual deepfake ads using Emma Watson’s face ran on Facebook and Instagram. The ads were part of a massive campaign this week for a deepfake app, which allows users to swap any face into any video of their choosing without much effort.

This is where the technology becomes extremely dangerous, and its irreversible effects are already visible. Twitch became the latest media platform to ban deepfakes yesterday, making it crystal clear that promoting, creating or sharing them is grounds for indefinite suspension. This comes not long after the platform had its own scandal last month, as BuzzFeed reported.

On January 30th, Twitch streamer Brandon “Atrioc” Ewing left a browser window open on stream that reportedly showed the faces of popular female Twitch streamers, including Pokimane, QTCinderella, and Maya Higa, “grafted onto the bodies of naked women.” In a tearful apology stream, Atrioc admitted he visited a deepfake site out of “morbid curiosity” about the images. “I just clicked a fucking link at 2AM, and the morals didn’t catch up to me,” he said while promising never to do anything like that again. But the damage was already done. Screenshots of the deepfakes flooded the internet, and the victims now had to see their faces and bodies edited to do things they never consented to.

— Maya (@mayahiga) February 1, 2023

I want to scream.

Stop.

Everybody fucking stop. Stop spreading it. Stop advertising it. Stop.

Being seen “naked” against your will should NOT BE A PART OF THIS JOB.

Thank you to all the male internet “journalists” reporting on this issue. Fucking losers @HUN2R

— QTCinderella (@qtcinderella) January 30, 2023

And it’s only getting worse. NBC News reported that the number of deepfake pornographic videos has nearly doubled every year since 2018. The technology itself is not harmful, but in the wrong hands, it can even disrupt political elections.

The law isn’t of much help either. According to the Cyber Civil Rights Initiative, only four US states have deepfake laws that aren’t specific to political elections. The UK, EU, and China are looking into crackdowns, too. In India, we don’t have any laws specifically for deepfake cybercrime, but various other laws can be combined to deal with it.

From Reddit posts asking whether it’s okay to deepfake your crush into videos, to commenters agreeing that celebrities are ‘fair game’ when it comes to this, the increasing use of this technology is very concerning. Even though major platforms like Meta and now Twitch have banned deepfakes, there’s no guarantee that they won’t show up on other sites on the world wide web. Until that changes, we just have to live knowing that anyone with a smartphone and malicious intent can create something so vile, and possibly get away with it.